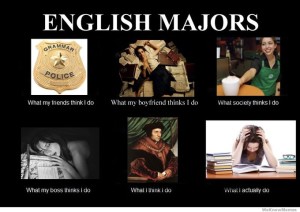

In a characteristically lively and thoughtful post, Katie Allen looks at some articles about computer programs that automate the evaluation of student writing. She eloquently expresses a concern that many in the humanities, myself included, share about the use of machines to perform tasks that have traditionally relied on human judgment. “Those of us who study English do so because we recognize literature to be an art form, and because we believe in the power of language to give shape to the world,” she writes. A computer can run algorithms to analyze a piece of writing for length and variety of sentences, complexity of vocabulary, use of transitions, etc., but it still takes a trained human eye, and a thinking subject behind it capable of putting words in context, to recognize truth and beauty.

Yet if we’re right to be skeptical about the capacity of machines to substitute for human judgment, we might ask whether there is some other role that algorithms might play in the work of humanists.

This is the question that Stephen Ramsay asks in his chapter of Reading Machines titled “An Algorithmic Criticism.”

Katie’s post makes Ramsay sound rather like he’s on the side of the robo-graders. She writes that he “favors a black-and-white approach to viewing literature that I have never experienced until this class. … [He] suggests we begin looking at our beloved literature based on nothing but the cold, hard, quantitative facts.”

In fact, though, Katie has an ally in Ramsay. Here is what he says about the difference, not between machines and humans, but more broadly between the aims and methods of science and those of the humanities:

> … science differs significantly from the humanities in that it seeks singular answers to the problems under discussion. However far ranging a scientific debate might be, however varied the interpretations offered, the assumption remains that there is a singular answer (or set of answers) to the question at hand. Literary criticism has no such assumption. In the humanities the fecundity of any particular discussion is often judged precisely by the degree to which it offers ramified solutions to the problem at hand. We are not trying to solve [Virginia] Woolf. We are trying to ensure that the discussion of [Woolf’s novel] The Waves continues.
>
> Critics often use the word “pattern” to describe what they’re putting forth, and that word aptly connotes the fundamental nature of the data upon which literary insight relies. The understanding promised by the critical act arises not from a presentation of facts, but from the elaboration of a gestalt, and it rightfully includes the vague reference, the conjectured similitude, the ironic twist, and the dramatic turn. In the spirit of inventio, the critic freely employs the rhetorical tactics of conjecture — not so that a given matter might be definitely settled, but in order that the matter might become richer, deeper, and ever more complicated. The proper response to the conundrum posed by [the literary critic George] Steiner’s “redemptive worldview” is not the scientific imperative toward verification and falsification, but the humanistic propensity toward disagreement and elaboration.

This distinction — which insists, as Katie does, that work in the humanities requires powers and dispositions that machines don’t possess and can’t appreciate (insight, irony) — provides the background for Ramsay’s attempt to sketch out the value of an “algorithmic criticism” for humanists. Science seeks results that can be experimentally “verified” or “falsified.” The humanities seek to keep a certain kind of conversation going.

We might add that science seeks to explain what is given by the world through the discovery of regular laws that govern that world, whereas the humanities seek to explain what it is like to be, and what it means to be, human in that world — as well as what humans themselves have added to it. To perform its job, science must do everything in its power to transcend the limits of human perspective; for the humanities, that perspective is unavoidable. As the philosopher Charles Taylor has put it, humans are “self-interpreting animals” — we are who we are partly in virtue of how we see ourselves. It would be pointless for us to understand what matters to us as humans from some neutral vantage outside the frame of human subjectivity and human concerns — “pointless” in the sense of “futile,” but also in the sense of “beside the point.” Sharpening our view of things from this vantage is precisely what the humanist is trying to do. If you tried to sharpen the view without simultaneously inhabiting it, you would have no way to gauge your own success.

The gray areas that are the inevitable territory of the English major, and in which Katie, as an exemplary English major, is happy to live, are — Ramsay is saying — the result of just this difference between science and the humanities. As a humanist himself, he’s happy there, too. He’s not suggesting that the humanities should take a black-and-white approach to literature. On the contrary, he insists repeatedly that texts contain no “cold, hard facts” because everything we see in them we see from some human viewpoint, from within some frame of reference; in fact, from within multiple, overlapping frames of reference.

Ramsay also warns repeatedly against the mistake of supposing that one could ever follow the methods of science to arrive at “verifiable” and “falsifiable” answers to the questions that literary criticism cares about.

What he does suggest, however, is that precisely because literary critics cast their explanations in terms of “patterns” rather than “laws,” the computer’s ability to execute certain kinds of algorithms and perform certain kinds of counting makes it ideally suited, in certain circumstances, to aid the critic in her or his task. “Patterns” of a certain kind are just what computers are good at turning up.

“Any reading of a text that is not a recapitulation of that text relies on a heuristic of radical transformation,” Ramsay writes. If your interpretation of Hamlet is to be anything other than a mere repetition of the words of Hamlet, it must re-cast Shakespeare’s play in other words. From that moment, it is no longer Hamlet, but from that moment, and not until that moment, understanding Hamlet becomes possible. “The critic who endeavors to put forth a ‘reading’ puts forth not the text, but a new text in which the data has been paraphrased, elaborated, selected, truncated, and transduced.”

There are many ways to do this. Ramsay’s point is merely that computers give us some new ones, and that the “radical transformation” produced by, for example, analyzing linguistic patterns in Woolf’s The Waves may take the conversation about the novel in some heretofore unexpected, and, at least for the moment, fruitful direction, making it richer, deeper, more complicated.
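For readers curious what such a computer-assisted "transformation" might look like in practice, here is a minimal sketch in Python. It assumes a simple tf-idf-style weighting, which is only one of many possible ways to count, and it runs on invented placeholder passages rather than on text from any novel. The speaker labels, the sample sentences, and the function name `distinctive_words` are all hypothetical. The output is not an answer to anything; it is a reshuffled view of the text that a critic might then argue with.

```python
import math
from collections import Counter

# Hypothetical sample passages, invented for illustration only.
# They are NOT quotations from The Waves or any other novel.
speeches = {
    "speaker_a": "the sea the waves the light the sea the waves the sea",
    "speaker_b": "the garden the hedge the light the garden the hedge the garden",
    "speaker_c": "the city the street the light the city the street the city",
}


def distinctive_words(speeches, top_n=2):
    """Rank each speaker's words by a simple tf-idf score.

    A word scores highly when one speaker uses it often and
    few other speakers use it at all.
    """
    counts = {name: Counter(text.lower().split()) for name, text in speeches.items()}
    n_speakers = len(counts)
    # For each word, the number of speakers who use it at least once.
    doc_freq = Counter(word for c in counts.values() for word in c)

    ranked = {}
    for name, c in counts.items():
        scores = {
            word: tf * math.log(n_speakers / doc_freq[word])
            for word, tf in c.items()
        }
        # Highest score first; ties broken alphabetically so the result is stable.
        ranked[name] = sorted(scores, key=lambda w: (-scores[w], w))[:top_n]
    return ranked


result = distinctive_words(speeches)
for name, words in result.items():
    print(name, words)
```

Words that every speaker shares, such as "the," score zero and drop to the bottom of each list, while words peculiar to one speaker rise to the top. Whether those words matter, and why, remains a question for the human reader.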

At a time when those of us in the humanities justly feel that what we do is undervalued in the culture at large, while what scientists do is reflexively celebrated (even as it is often poorly understood), there are, I believe, two mistakes we can make.

One is the mistake that Ramsay mentions: trying to make the humanities scientific, in the vain hope that doing so will persuade others to view what we do as important, useful, “practical.” (Katie identifies a version of this mistake in the presumption that robo-grading can provide a more “accurate” — that is, more scientific — assessment of students’ writing skills than humans can.)

But the other mistake would be to take up a defensive posture toward science, to treat the methods and aims of science as so utterly alien, if not hostile, to the humanities that we should guard ourselves against contamination by them and, whenever possible, proclaim from the rooftops our superiority to them. Katie doesn’t do this, but there are some in the humanities who do.

In a recent blog post on The New Anti-Intellectualism, Andrew Piper calls out those humanists who seem to believe that “the world can be neatly partitioned into two kinds of thought, scientific and humanistic, quantitative and qualitative, remaking the history of ideas in the image of C.P. Snow’s two cultures.” It’s wrongheaded, he argues, to suppose that “Quantity is OK as long as it doesn’t touch those quintessentially human practices of art, culture, value, and meaning.”

Piper worries that “quantification today is tarnished with a host of evils. It is seen as a source of intellectual isolation (when academics use numbers they are alienating themselves from the public); a moral danger (when academics use numbers to understand things that shouldn’t be quantified they threaten to undo what matters most); and finally, quantification is just irrelevant.”

That view of quantification is dangerous and unfortunate, I think, not only because we need quantitative methods to help us make sense of such issues of pressing human concern as wealth inequality and climate change, but also because artists themselves measure sound, syllable, and space to take the measure of humanity and nature.

As Piper points out, “Quantity is part of that drama” of our quest for meaning about matters of human concern, of our deeply human “need to know ‘why.’”

Admin’s note: This post has been updated since its original appearance.